With many companies moving from on-premises infrastructure to the cloud, Amazon S3 is commonly used as a storage layer for existing on-premises data. Because the data is transferred over the internet, these files need to be encrypted before they are uploaded to S3.

Before processing the file data further, we must first decrypt these files. In most cases, files arrive at no fixed time and in large numbers. The traditional approach, an EC2 or EMR job that continuously polls the S3 bucket for new files, is not practical here. The EC2 instance or EMR cluster would have to run around the clock, which drives up cost, and a transient EMR cluster or EC2 instance is a poor fit for continuous file ingestion.

A better way to automate this process is AWS Lambda, a serverless compute service designed for event-driven use cases like the one discussed in this blog.

Why AWS Lambda?

Because Lambda is serverless, there are no servers to provision or manage, and it scales automatically. A function can be configured with up to 10 GB of memory and a maximum execution time of 15 minutes. It also gets ephemeral storage in the /tmp directory (512 MB by default, configurable up to 10 GB), and external libraries can be added to a function through Lambda layers.

Why GnuPG?

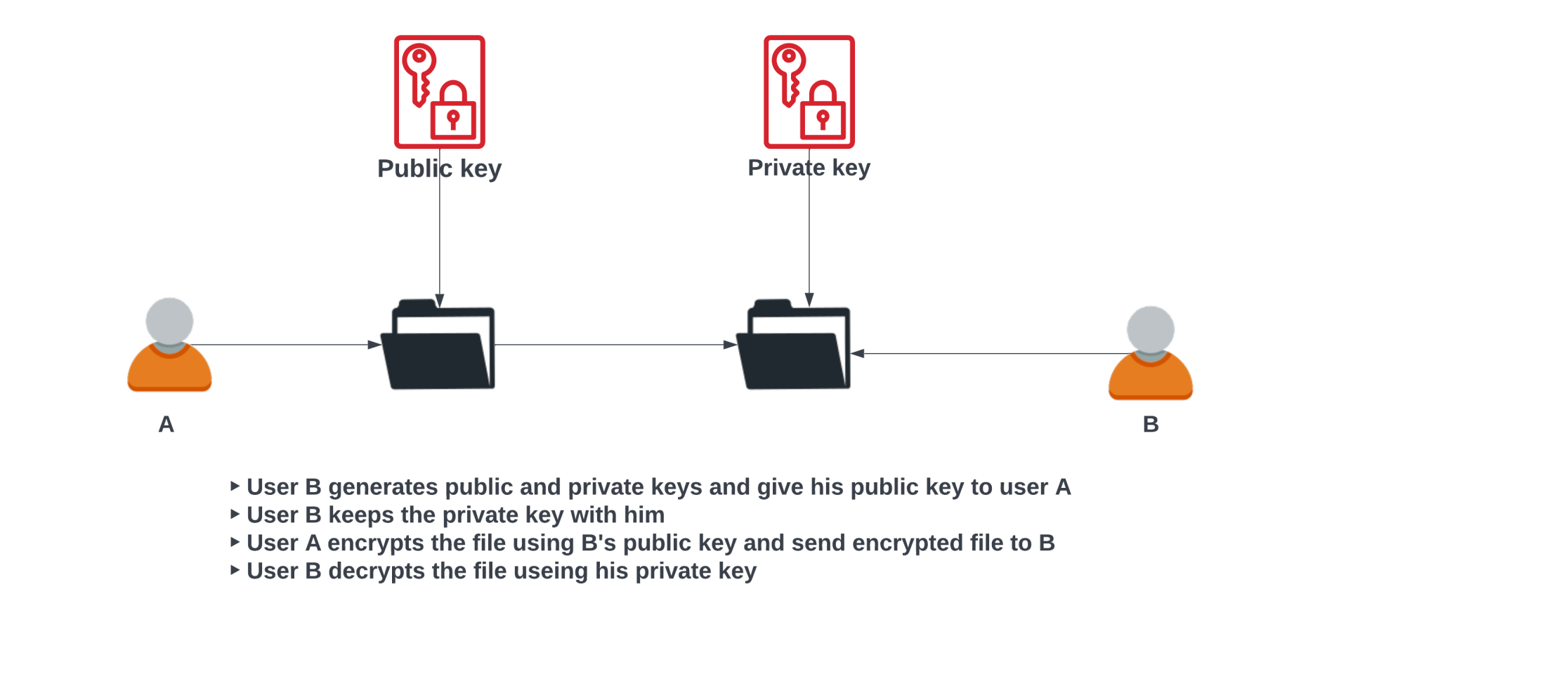

GnuPG, commonly called GPG, is a free implementation of the OpenPGP standard, which grew out of PGP (Pretty Good Privacy) and uses public and private keys for encryption. Data is encrypted with the public key and decrypted with the matching private key, so data can be encrypted and decrypted without ever exchanging private keys.

The diagram below depicts how PGP works:

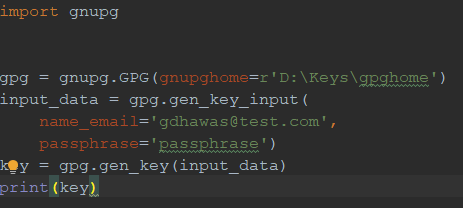

Generate Keys

Using the code below, we can generate the public and private keys by providing home_dir, email, and passphrase.

The code uses the Python library for GnuPG, installed with: pip install python-gnupg

This creates the public and private keys under the gnupghome directory.

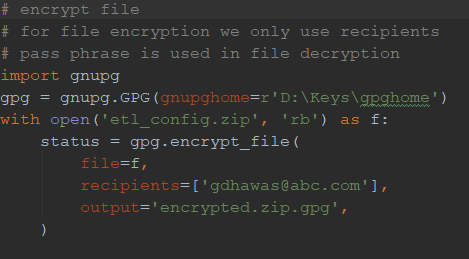
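As a rough sketch of the key generation step, the snippet below uses python-gnupg's `gen_key_input`, `gen_key`, and `export_keys`. The helper names (`make_gpg`, `generate_key_pair`, `export_public_key`), the RSA 2048 settings, and the example paths are assumptions for illustration, and a `gpg` binary is assumed to be installed on the machine.

```python
import os


def make_gpg(home_dir):
    """Return a python-gnupg GPG object rooted at home_dir."""
    # Imported lazily so the helpers below load even without the package.
    import gnupg  # pip install python-gnupg (also needs the gpg binary)

    os.makedirs(home_dir, mode=0o700, exist_ok=True)
    return gnupg.GPG(gnupghome=home_dir)


def generate_key_pair(gpg, email, passphrase):
    """Generate an RSA key pair and return its fingerprint."""
    key_input = gpg.gen_key_input(
        key_type="RSA",      # assumed algorithm, not taken from the article
        key_length=2048,     # assumed key size
        name_email=email,
        passphrase=passphrase,
    )
    key = gpg.gen_key(key_input)
    if not key.fingerprint:
        raise RuntimeError("GnuPG did not return a key fingerprint")
    return key.fingerprint


def export_public_key(gpg, fingerprint, path):
    """Write the ASCII-armored public key to path so it can be shared."""
    with open(path, "w") as handle:
        handle.write(gpg.export_keys(fingerprint))
    return path


# Example wiring (hypothetical paths and values):
#   gpg = make_gpg("/path/to/gnupghome")
#   fingerprint = generate_key_pair(gpg, "user@example.com", "my-passphrase")
#   export_public_key(gpg, fingerprint, "public_key.asc")
```

Passing the `gpg` object into each helper keeps the GnuPG home directory an explicit choice of the caller.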

Encrypt File:

Once the keys are generated, share the public key with whoever will encrypt the files. On the encrypting side, create a GnuPG home directory and store the public key in it. To encrypt, pass the email address that was used to generate the keys; GnuPG uses it to pick the corresponding public key from the home directory.

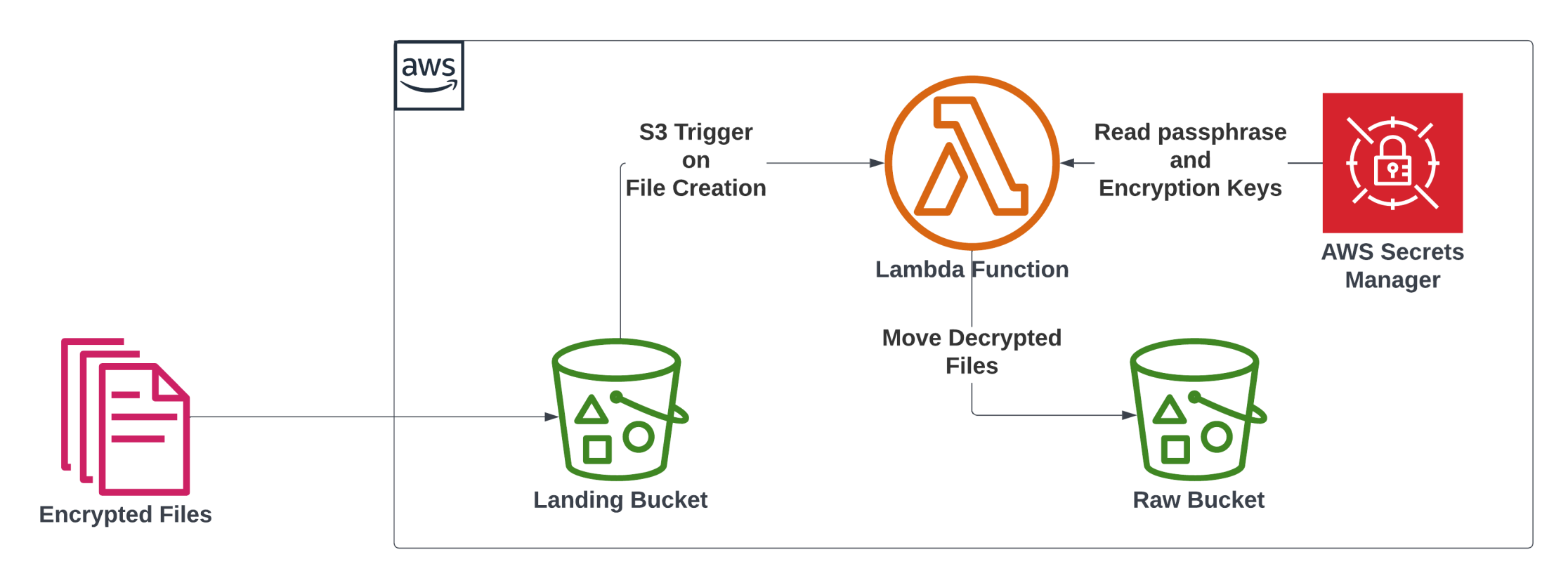
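The encryption step could look roughly like the sketch below, which relies on python-gnupg's `import_keys` and `encrypt_file`. The function name `encrypt_file` at module level, the file paths, and the use of `always_trust=True` are assumptions made for this example; `gpg` is expected to be a `gnupg.GPG(gnupghome=...)` object.

```python
def encrypt_file(gpg, public_key_path, recipient_email, input_path, output_path):
    """Encrypt input_path for recipient_email and write it to output_path."""
    # Store the shared public key in this GnuPG home directory.
    with open(public_key_path) as handle:
        imported = gpg.import_keys(handle.read())
    if imported.count == 0:
        raise RuntimeError("no public key could be imported")

    # always_trust=True skips the trust check for the freshly imported key
    # (an assumption here; mark the key as trusted instead if policy requires).
    with open(input_path, "rb") as handle:
        result = gpg.encrypt_file(
            handle,
            recipients=[recipient_email],
            output=output_path,
            always_trust=True,
        )
    if not result.ok:
        raise RuntimeError(f"encryption failed: {result.status}")
    return output_path


# Example wiring (hypothetical paths and values):
#   import gnupg
#   gpg = gnupg.GPG(gnupghome="/path/to/gnupghome")
#   encrypt_file(gpg, "public_key.asc", "user@example.com",
#                "data.csv", "data.csv.gpg")
```

The recipient email is what selects the public key, which matches the lookup described above.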

The encrypted file is then transferred to the S3 bucket. Once the file is uploaded, an S3 event notification triggers the corresponding Lambda function, which decrypts the file as shown in the diagram below.

Decrypt File:

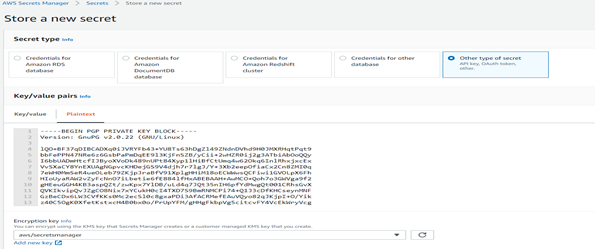
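When S3 triggers the function, the bucket name and object key arrive inside the event payload. The sketch below shows one way the handler might pull them out; the helper name `objects_from_s3_event` and the return value are assumptions for illustration. Object keys in S3 events are URL-encoded, so they are decoded before use.

```python
from urllib.parse import unquote_plus


def objects_from_s3_event(event):
    """Return a list of (bucket, key) pairs, one per record in an S3 event."""
    pairs = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Keys are URL-encoded in the event (for example, spaces become '+').
        key = unquote_plus(record["s3"]["object"]["key"])
        pairs.append((bucket, key))
    return pairs


def lambda_handler(event, context):
    """Entry point: list the newly uploaded objects that need decrypting."""
    objects = objects_from_s3_event(event)
    for bucket, key in objects:
        print(f"new encrypted object: s3://{bucket}/{key}")
    return {"objects": len(objects)}
```

The decryption itself would then run once per (bucket, key) pair.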

Decrypting the file requires the corresponding private key. Store this private key and its passphrase in AWS Secrets Manager as plaintext secrets, as shown in the image below.

For decryption, we have to pass the passphrase and a GnuPG home directory. Since an S3 bucket cannot be used as the home directory, we can use the ephemeral storage directory “/tmp” provided by Lambda as the home directory for GnuPG.

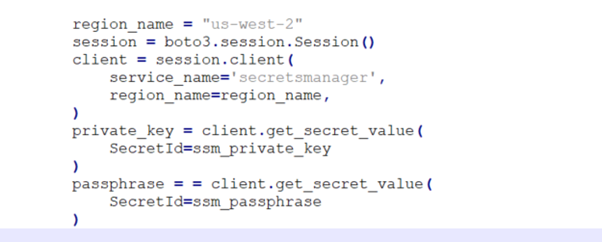
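Reading the two plaintext secrets back might look like the sketch below. It assumes the private key and the passphrase are stored as two separate secrets, and the secret names in the usage comment are hypothetical. `client` is expected to be a boto3 Secrets Manager client, whose `get_secret_value` call returns plaintext secrets under `SecretString`.

```python
def load_gpg_secrets(client, key_secret_id, passphrase_secret_id):
    """Fetch the private key and passphrase stored as plaintext secrets."""
    private_key = client.get_secret_value(SecretId=key_secret_id)["SecretString"]
    passphrase = client.get_secret_value(SecretId=passphrase_secret_id)["SecretString"]
    return private_key, passphrase


# Example wiring (hypothetical secret names):
#   import boto3
#   client = boto3.client("secretsmanager")
#   private_key, passphrase = load_gpg_secrets(
#       client, "gpg/private-key", "gpg/passphrase")
```

The function's execution role also needs permission to read these secrets.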

Within the Lambda code, read the private key from Secrets Manager, write it to a file in the /tmp directory, set gnupghome to /tmp, and pass the passphrase to decrypt the files.
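A minimal sketch of the decryption step follows, using python-gnupg's `import_keys` and `decrypt_file`. It differs slightly from the description above: the key text is imported directly rather than written to a file first, and the home directory is a subdirectory, `/tmp/gnupg`, instead of `/tmp` itself. Those choices, the function name, and the paths are assumptions, and a `gpg` binary is assumed to be available to the function (for example through a layer).

```python
def decrypt_file(gpg, private_key, passphrase, encrypted_path, output_path):
    """Import the private key, then decrypt encrypted_path into output_path."""
    # The key lands in the GnuPG home directory, which lives under /tmp.
    imported = gpg.import_keys(private_key)
    if imported.count == 0:
        raise RuntimeError("private key could not be imported")

    with open(encrypted_path, "rb") as handle:
        result = gpg.decrypt_file(
            handle,
            passphrase=passphrase,
            output=output_path,
        )
    if not result.ok:
        raise RuntimeError(f"decryption failed: {result.status}")
    return output_path


# Example wiring inside the Lambda function (hypothetical paths):
#   import os
#   import gnupg
#   os.makedirs("/tmp/gnupg", mode=0o700, exist_ok=True)
#   gpg = gnupg.GPG(gnupghome="/tmp/gnupg")
#   decrypt_file(gpg, private_key, passphrase,
#                "/tmp/data.csv.gpg", "/tmp/data.csv")
```

Both the encrypted input and the decrypted output sit in /tmp here, so together they have to fit within the function's ephemeral storage.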

If the incoming files are large and the Lambda execution time exceeds 15 minutes, you can use AWS Glue jobs with a similar approach. AWS Glue also provides ephemeral storage under “/tmp”, and its job timeout can be set far higher than Lambda's 15-minute limit.